How to never lose your GA4 data

Use this guide to learn how to store your Google Analytics 4 data forever using Google Cloud Platform and BigQuery, and why it's not as scary or as complicated as it sounds.

Keep reading and learn the following:

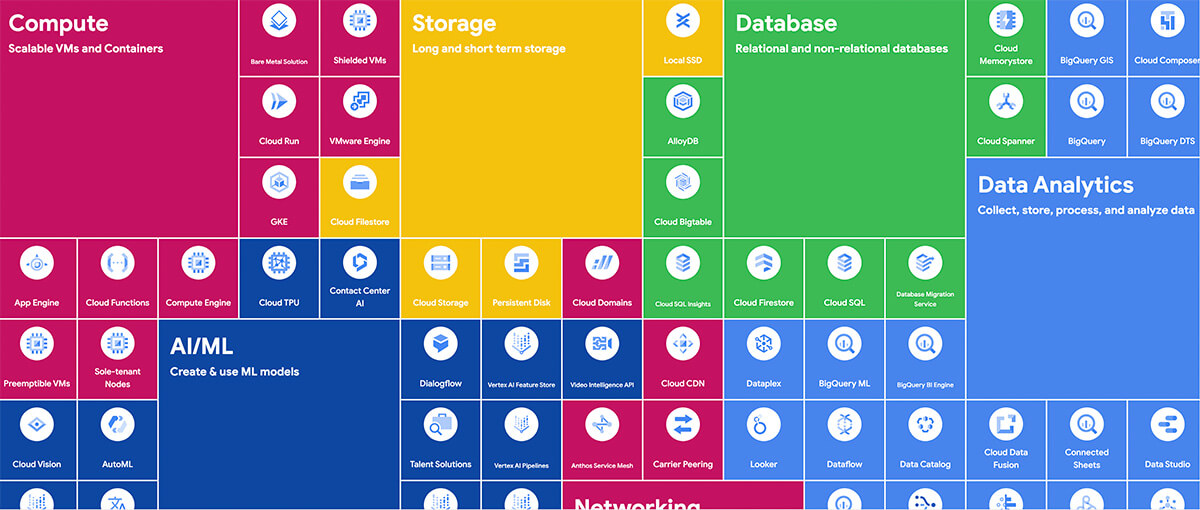

It's worth noting that Google Cloud Platform covers much more than data, including web and app development and analytics pipelining. In GCP, if you can think it, you can do it (pretty much).

Lacë Rogers, Head of Analytics and Data

1. What is GCP?

The Google Cloud Platform is a suite of cloud computing products which use the same infrastructure as Google's end-user services.

These resources provide tools to ingest data, run applications, perform analysis and much more. Each tool has separate pricing, which sits under a single billing account.

So why are we talking about GCP when we're looking at GA4? GA4 offers a free daily export to BigQuery, which lets you store your GA4 event and user level data persistently. Previously this was seen as an optional extra. However, since Google began enforcing API quota limits in November, many API-based reports have failed, which makes the export far more important.

BigQuery is a GCP service that provides scalable analytics storage, and it is the only tool you can export your raw GA4 data to. To retain your GA4 data permanently, you need to embrace the world of GCP.

GCP services for data

GCP is a collection of tools that support everything from website development to advanced analytics and machine learning.

This guide focuses on data analytics, showing you how to connect and keep your GA4 data.

The Google Cloud Platform is growing continuously. A good example is Looker Studio becoming part of the GCP family, which means analysts can expect closer alignment with GCP IAM and tools soon.

Before discovering how to secure your GA4 data, it's worth noting that GCP covers so much more than data, including web and app development and analytics pipelining. In GCP, if you can think it, you can do it (pretty much).

See the Google Cloud Cheat Sheet.

How much will your organisation spend?

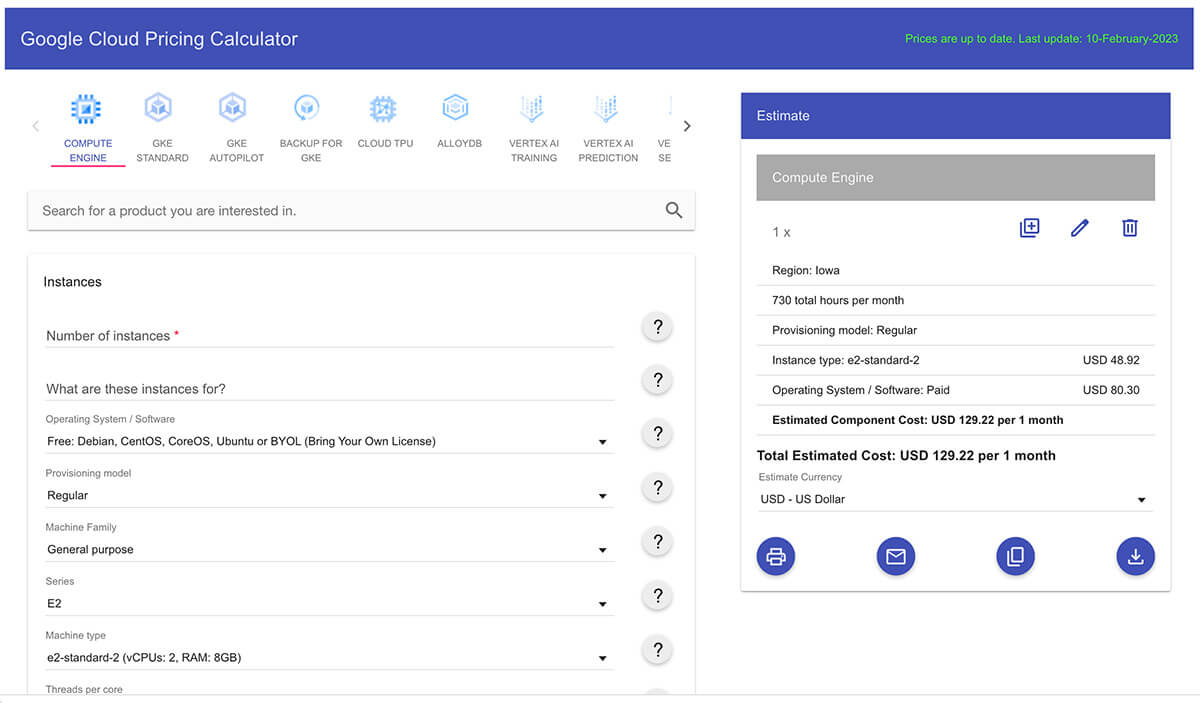

Cost is often a barrier to entry, and getting started can feel scary, but you can predict your spend and manage it easily.

Google has a straightforward pricing calculator to help you establish the cost of the GCP services you wish to use.

GCP also provides free quotas on projects each month, including 1TB of BigQuery queries and 10GB of active and long-term storage.

Thankfully, that's a lot of free usage before you start getting charged.

Another free quota is for Cloud Storage, which provides 5GB of free monthly storage.

Key benefits

- Scalable pricing – you only pay for what you use.

- Track spending - you can easily track spending in the console and via the billing exports.

One of the top questions we are often asked is how much it will cost and whether it will be expensive.

GCP is a pay-for-what-you-use service with general free allowances that reset every calendar month. In our experience, GA4 BigQuery users rarely exceed $50 a month, even when connecting their Looker Studio dashboards.

However, remember that querying BigQuery from visualisation tools, or from code such as Python, still incurs a cost.
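To get a feel for how the free allowances work, here is a minimal Python sketch of a monthly estimate. The function name and the default rates are assumptions for illustration. The rates roughly match on-demand USD pricing at the time of writing, but prices vary by region and change over time, so treat the pricing calculator as the source of truth.

```python
# Rough monthly BigQuery cost estimate after the free allowances.
# ASSUMPTION: default rates are illustrative placeholders, not official prices.

def estimate_monthly_cost(
    query_tib: float,
    storage_gib: float,
    price_per_tib_queried: float = 6.25,  # assumed on-demand query rate (USD per TiB)
    price_per_gib_stored: float = 0.02,   # assumed active storage rate (USD per GiB per month)
    free_query_tib: float = 1.0,          # free monthly query allowance
    free_storage_gib: float = 10.0,       # free monthly storage allowance
) -> float:
    """Return the estimated monthly cost in USD, rounded to cents."""
    billable_query = max(0.0, query_tib - free_query_tib)
    billable_storage = max(0.0, storage_gib - free_storage_gib)
    cost = billable_query * price_per_tib_queried + billable_storage * price_per_gib_stored
    return round(cost, 2)


# A small property that stays inside the free tier.
print(estimate_monthly_cost(query_tib=0.5, storage_gib=5))  # 0.0
# Heavier dashboard use: 3 TiB scanned and 60 GiB stored in one month.
print(estimate_monthly_cost(query_tib=3, storage_gib=60))   # 13.5
```

The same pattern extends to Cloud Storage or long-term storage. You would add a further allowance and rate parameter for each.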

Learn more: Google Cloud pricing

Where is your data stored?

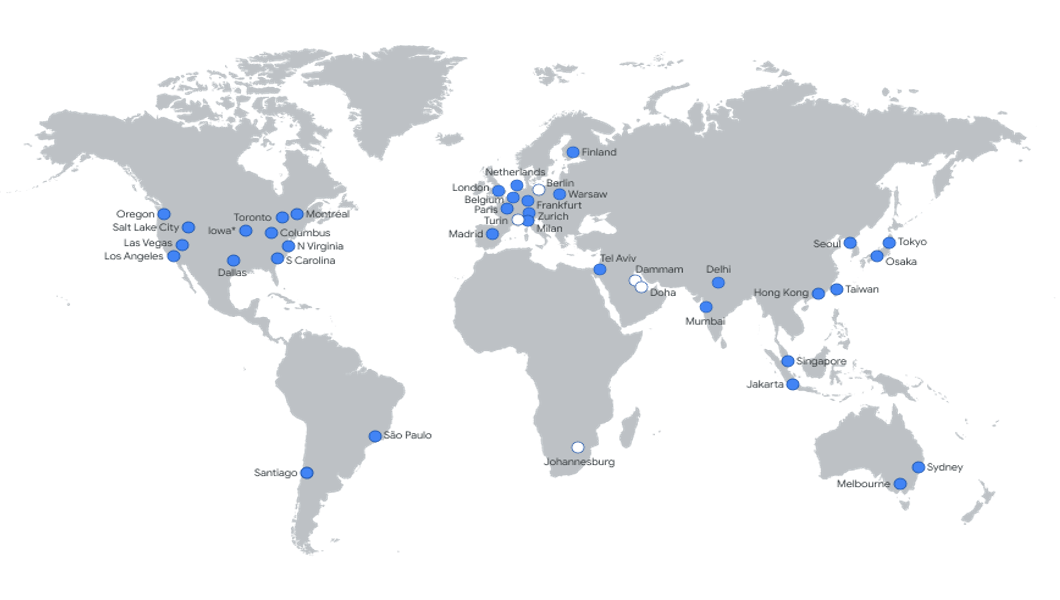

It's in the cloud, but your data is still processed and stored in physical locations.

Key points:

- Choose a region where your head office is based.

- Pricing can vary slightly, and disaster recovery policies differ.

- Locate your BigQuery datasets in the same region.

Although your data is in the cloud and you access it remotely, it is physically stored in a data centre. You cannot query data across regions.

We recommend defining a best-practice policy to store and process data in a region that meets your GDPR and business compliance requirements.

Usually, this will be the location of your head office. Remember, US data is subject to US laws. Google Cloud's infrastructure spans:

- 35 regions

- 106 zones

- 176 network edge locations

- Availability in 200+ countries

Learn more: BigQuery locations

What is BigQuery or GBQ?

BigQuery (or GBQ) is a powerful, fully managed service that covers analytics (including SQL), data storage and secure data management.

BigQuery can act as a storage solution for your GA4 data.

Teams use it to host reporting tables for tools like Looker and Power BI, and for machine learning and other advanced analytics. It can also act as a data lake that feeds your business's preferred warehousing solution, e.g. on AWS or Azure.

It offers the following:

- Scalable to your business: A fully-managed, serverless data warehouse that enables scalable analysis over petabytes of data. It's not just for GA4 data.

- Analysis: Descriptive and prescriptive analysis uses include business intelligence, ad hoc analysis, machine learning, and much more.

- It stores data: BigQuery stores data in a columnar format, but tables are displayed as rows and columns, much like an Excel sheet. What's more, you can preview most tables without programming.

- Secure management: The tool provides centralised management of data and compute resources that are secured using Identity and Access Management (IAM).

Learn more: What is BigQuery?

So why is it important?

BigQuery is the only destination you can export your GA4 event and user level data to.

Historically, only UA360 customers had a free GBQ integration to get their hit level data, with Firebase users having access to the event and user level data.

Since the onset of GA4, this has been made available to all users.

Key facts

- You cannot backfill the export with historical data, so anything collected before you connect it is lost.

- Once connected, the data is kept until you choose to delete it.

- If your property records around 50,000 events a day, you can expect to pay around $10 per month to keep your GA4 data.

- Even if you don't use it now, you will likely have to in the future.

UA reporting has always relied heavily on the aggregated API. The GA4 BQ dataset, however, opens up many opportunities: you can customise reporting, understand your customers' behaviour, make sure you treat them fairly and optimise their journeys. The app industry has been privileged to have the Firebase GBQ dataset, which ultimately opened the door to data-driven decisions and customised business reporting.

For example, you could identify audiences who could go viral and advertise to similar users as lookalike audiences. You could also identify what users commonly do in their first session and how to keep them engaged. The potential is endless. But if you don't connect it, you'll lose it.

Important!

Google currently has no plans to import UA data into GA4, so BigQuery is a great place to join your historical UA data with your GA4 data.

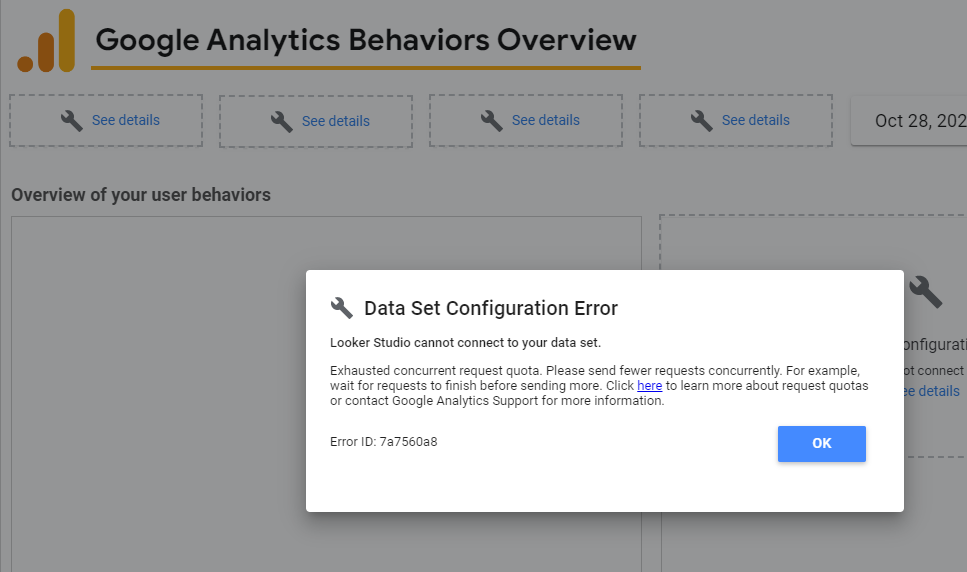

Quota limits

Google has enforced quota limits on the API.

- A limit of ten concurrent requests

- Only 1,250 tokens per project per property per hour

- GA4-360 have higher quotas

- You cannot increase these limits

These limits make it much harder to use the API for reporting. Ultimately, the best solution is to use your GA4 GBQ dataset.

See our blog: Seven steps to beating the GA4 API quota limits.

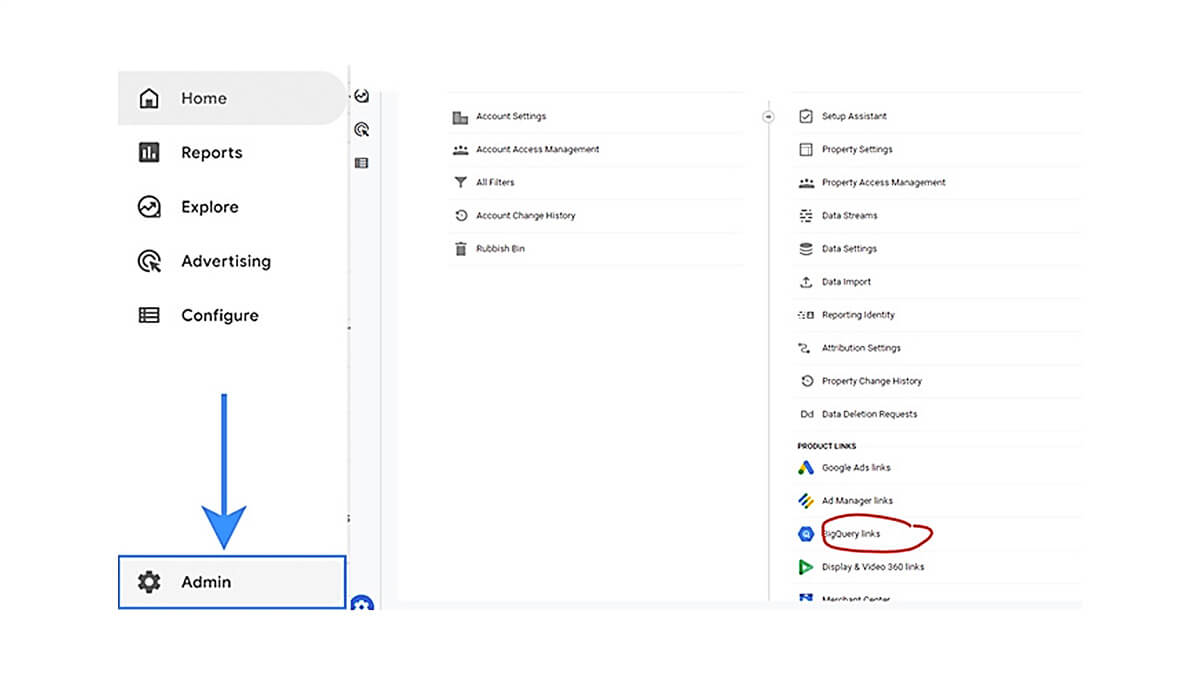

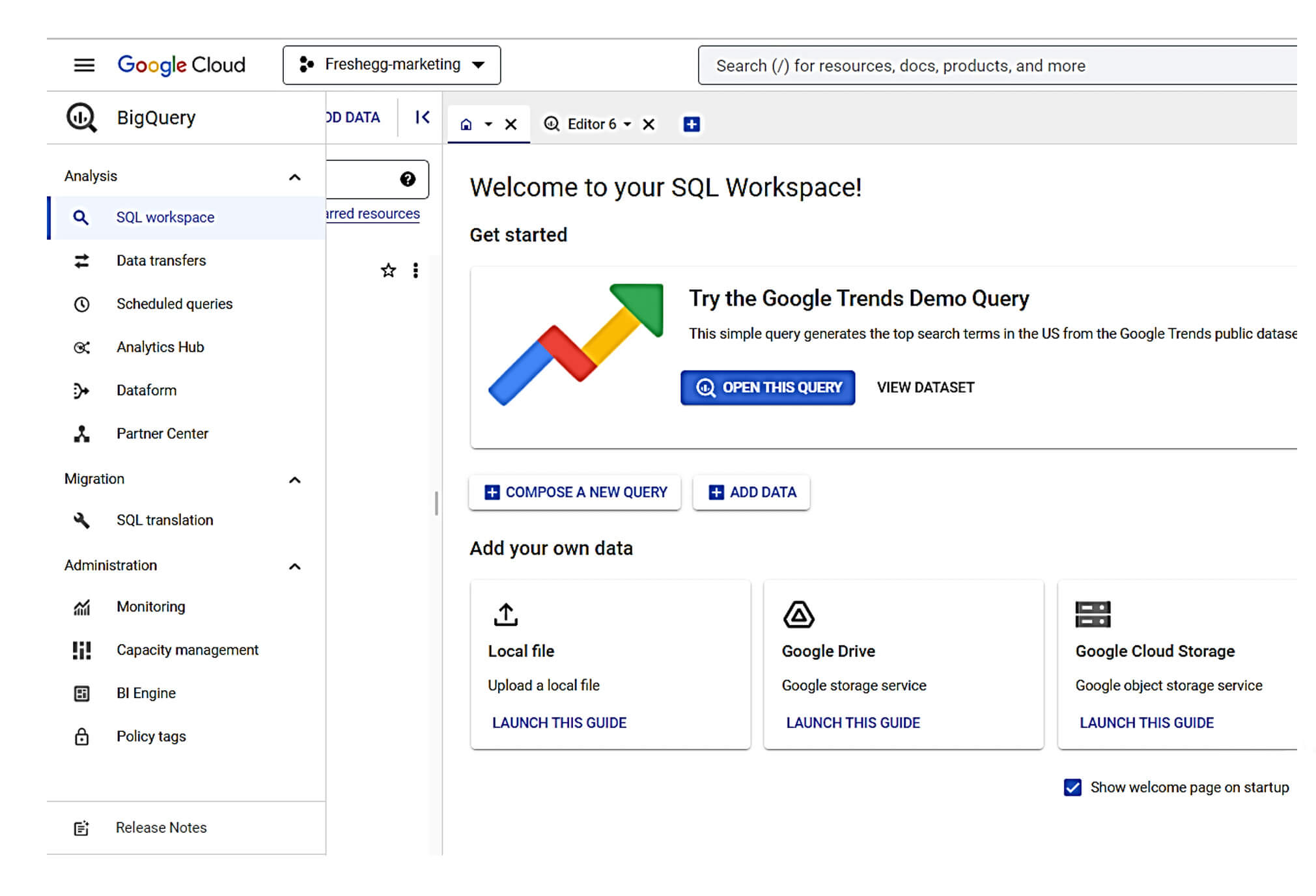

2. How to connect your GA4 account to GCP

There are (only) four steps to begin collecting GA4 data, starting with ensuring you have the proper access levels.

- Step 1: Create a GCP project and connect a billing account. New Google Cloud customers get $300 of free credit to use within the first 90 days, which is an excellent time for your team to onboard to GBQ or implement your pipelining solution.

- Step 2: Navigate to GA4 admin and select BigQuery links

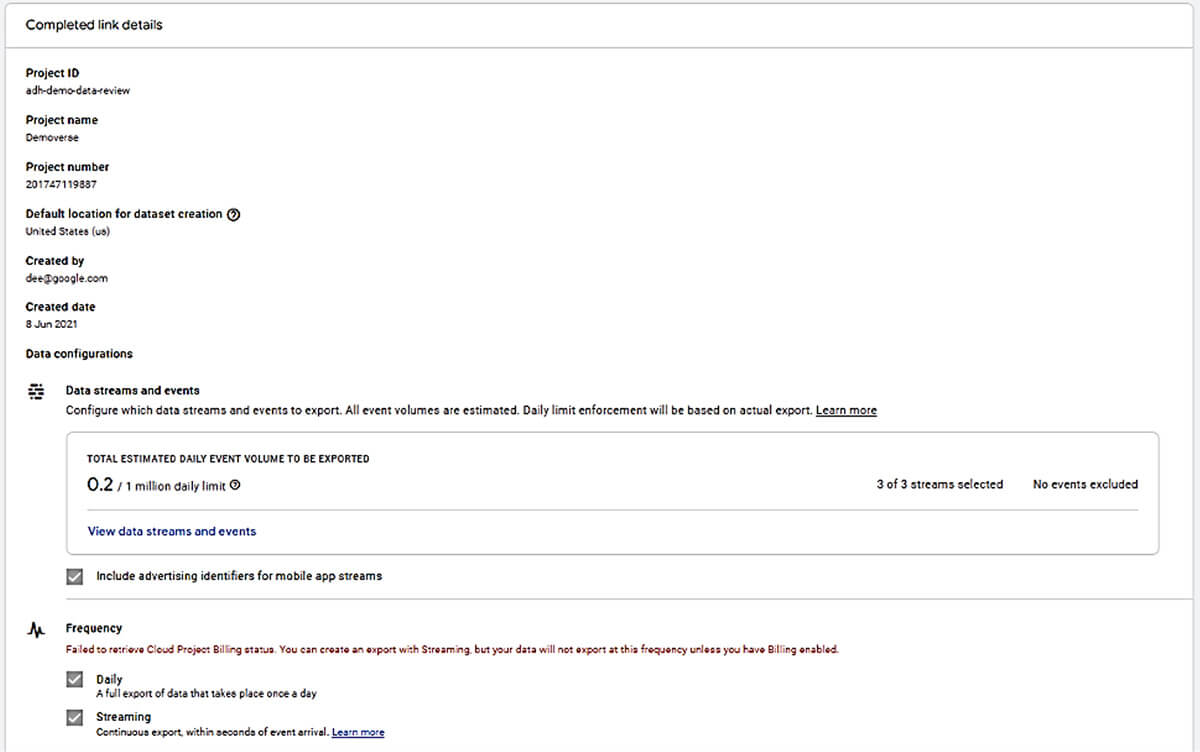

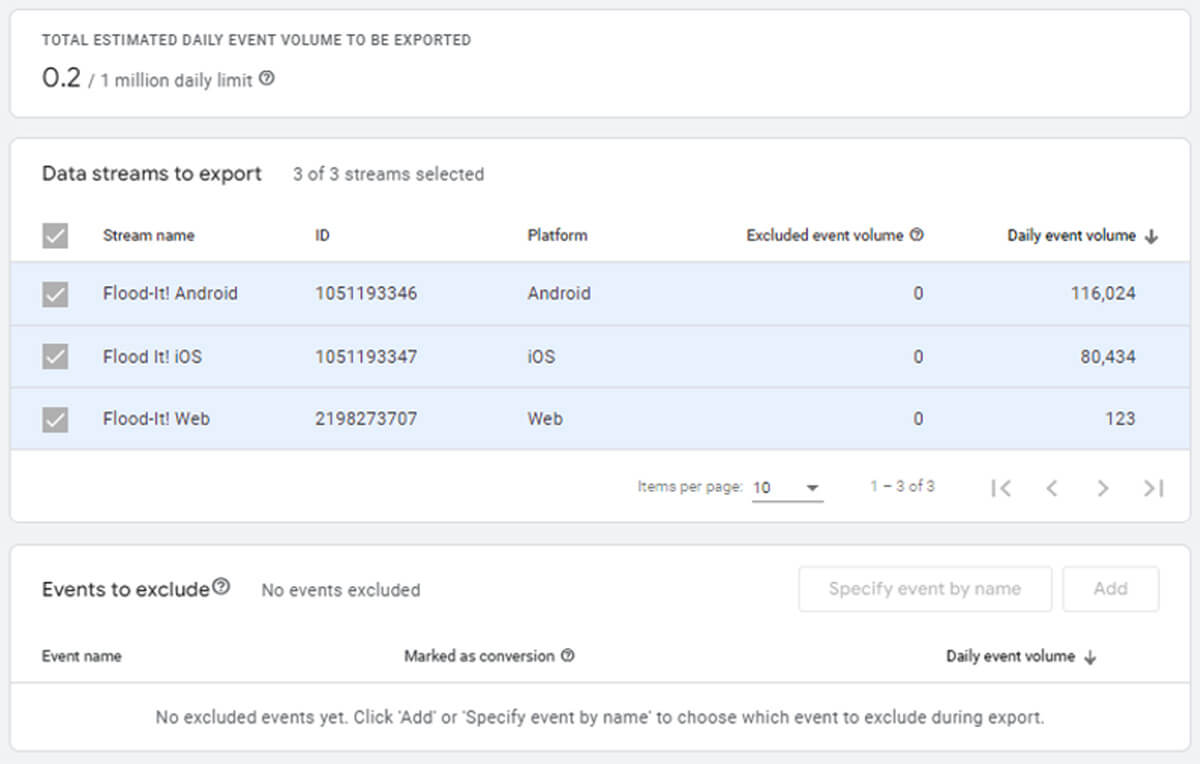

- Step 3: Configure data streams and events

- Step 4: Verify that your service account has been added with the BigQuery User role

However, if you have over one million events per day, you will need to refine this process and exclude events from the data stream. This requires careful consideration, but it is vital: if you go over the one million limit, your export can be paused and you will lose data.

We recommend excluding high-volume, low-value events, such as custom events you don't need for reporting.
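You may already know roughly how often each event fires per day, for example from the GA4 Events report. If so, a simple greedy check shows which low-value events to drop first. This is a hypothetical helper and not part of any Google tool. The event names and counts below are made up, and you decide which events count as "low value".

```python
# Hypothetical helper: choose low-value events to exclude from the BigQuery
# export until the estimated daily volume is within the export limit.
DAILY_EXPORT_LIMIT = 1_000_000  # standard daily export limit cited in this guide


def events_to_exclude(daily_counts: dict[str, int], low_value: set[str],
                      limit: int = DAILY_EXPORT_LIMIT) -> list[str]:
    """Return low-value event names to exclude, busiest first."""
    total = sum(daily_counts.values())
    excluded: list[str] = []
    # Try the busiest low-value events first so as few events as possible are excluded.
    candidates = sorted(
        (name for name in daily_counts if name in low_value),
        key=lambda name: daily_counts[name],
        reverse=True,
    )
    for name in candidates:
        if total <= limit:
            break
        excluded.append(name)
        total -= daily_counts[name]
    return excluded


counts = {"page_view": 600_000, "scroll": 300_000,
          "user_engagement": 250_000, "purchase": 2_000}
print(events_to_exclude(counts, low_value={"scroll", "user_engagement"}))  # ['scroll']
```

The helper removes the busiest low-value events first, so it keeps the exclusion list as short as possible. If it returns every candidate, check whether the remaining volume is still over the limit. If it is, you'll need to revisit which events you treat as low value.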

See our blog: How to set up a GA4 BigQuery export.

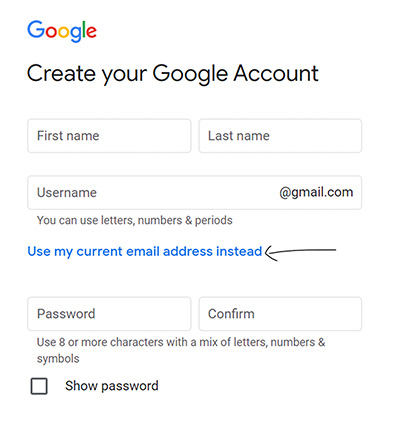

Set up your Gmail address

Before proceeding, you will need to set up your Gmail address and billing account.

- If your team has a Google Workspace account, use that email address.

- If you have a non-Google company email, use this link and select "use my current email address" to get Google access.

- Now head to https://cloud.google.com/, where it will take you through the steps to create your billing account and "first project".

💡Top tip

If your team has Google identities or Workspace, check whether you already have an organisation or billing account set up, as you can centralise management of these easily.

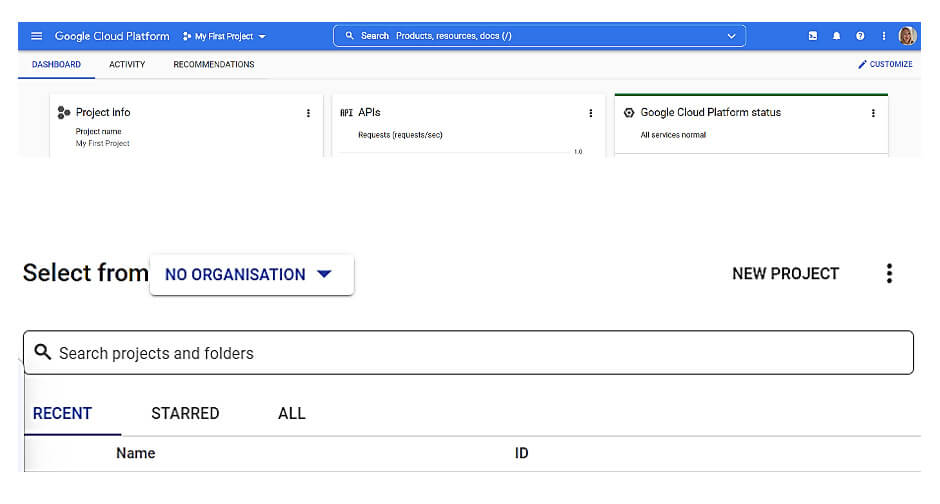

Set up your GCP project

Now you will set up your project. You will need to consider the following:

- Defining your project name.

- The organisation you wish to store data in (if you have one).

- The region you want to store data in

- Who should have access to the data initially

💡Top tip

Always add two owners (you can remove yourself later). Also, consider who else needs access to the project.

Once you have set up your project, navigate to the admin panel of GA. Remember, you must have at least the 'editor' permission to do this.

Learn more: Create a Google Cloud project

💡Top tip

Think about the naming convention and purpose of the GBQ project. You can add other sources later, so calling it 'GA4-project' may not be the best idea.
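A project ID can't be changed once the project is created, so it helps to check a proposed ID before you commit. The sketch below encodes the basic documented format rules: 6 to 30 characters, only lowercase letters, digits and hyphens, starting with a letter and not ending with a hyphen. It doesn't check every restriction, such as whether an ID is already taken. The example names are hypothetical.

```python
import re

# Basic GCP project ID format: 6-30 chars, lowercase letters, digits and hyphens,
# starting with a letter and ending with a letter or digit.
PROJECT_ID_PATTERN = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


def is_valid_project_id(project_id: str) -> bool:
    """Return True if project_id matches the basic format rules."""
    return PROJECT_ID_PATTERN.fullmatch(project_id) is not None


for candidate in ["acme-marketing-data", "GA4-project", "ga4", "acme-data-"]:
    print(candidate, is_valid_project_id(candidate))
```

A broader name like "acme-marketing-data" passes and leaves room for other sources. "GA4-project" fails the format check because of its uppercase letters.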

Manage the configuration

If you are using GCP sandbox mode, you will not be able to set up the real-time streaming tables.

Also, be mindful that if your daily export shows over one million records, you will need to filter the data stream and exclude events that aren't integral to future analysis.

You must select the region you are exporting to and whether to include advertising identities.

Your GA4 export will not currently include cost, search or other integrations available in GA4.

You can then choose which data streams to export (for example, excluding staging app streams) and which events to exclude.

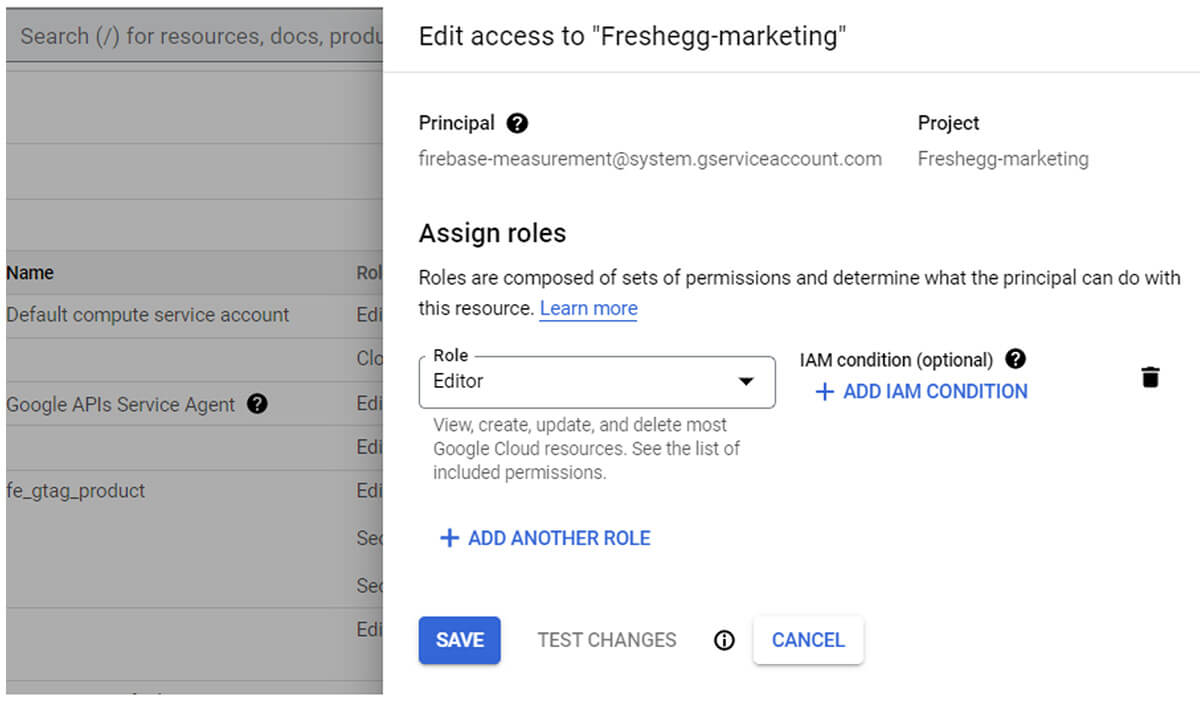

Finally, check that the Firebase measurement service account is set up, and downgrade its access to BigQuery Admin.

This is called Firebase because it uses a protocol initially developed by Google for Firebase.

Things to remember

- You must have admin access to Google Analytics and editor-level access to GCP.

- If this is your first billing account or project, you get a $300 allowance for the first 90 days.

- You can only export up to 1 million rows of data per day, but you can exclude specific events if you’re likely to exceed this.

- You cannot backfill the user and event level tables at present.

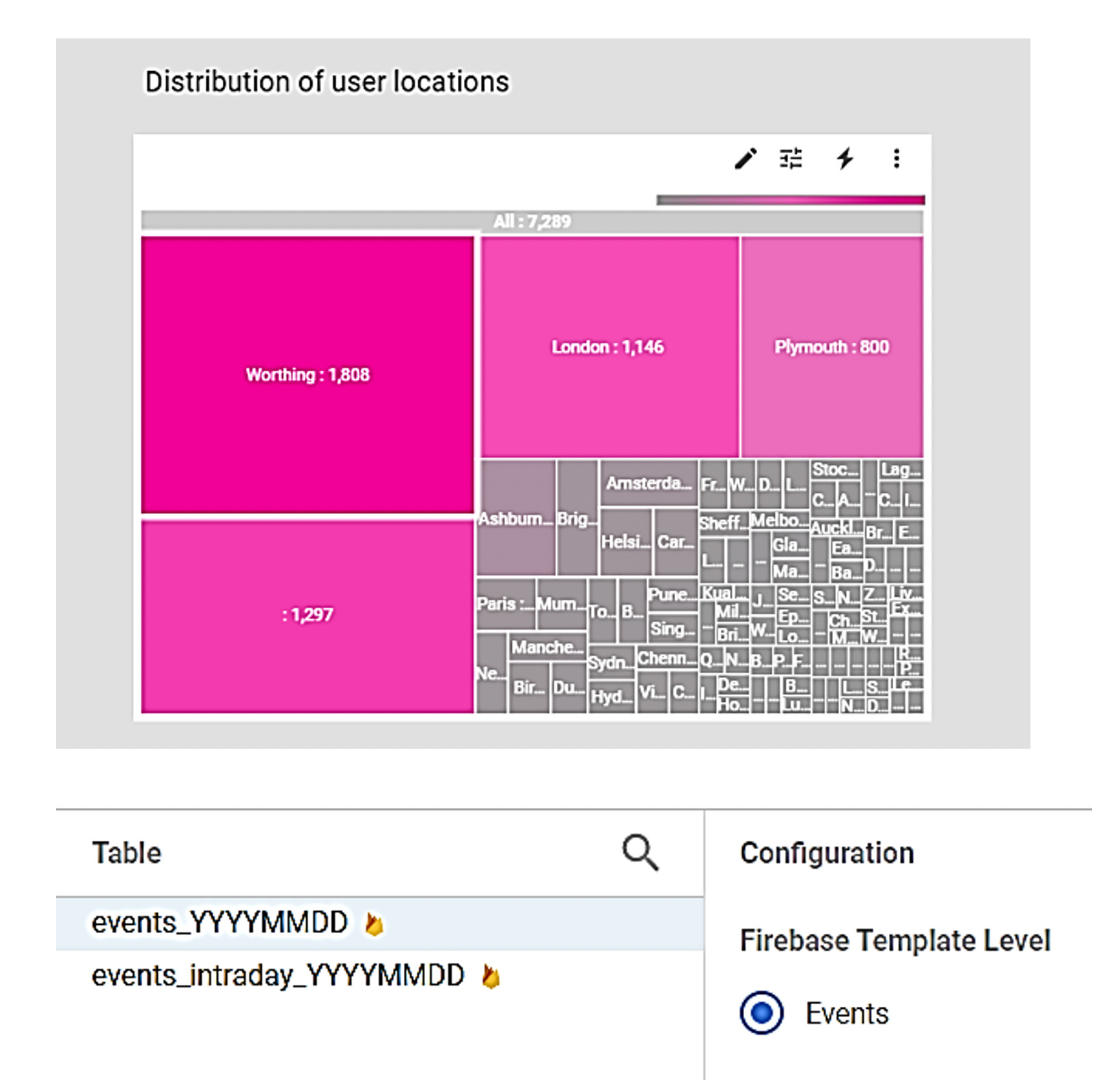

- You will see daily and intraday (real-time-ish) data.

- Integrations such as marketing cost and search data are not included in the export.
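The export writes to a dataset named analytics_ followed by your GA4 property ID. It creates one daily table per day (events_YYYYMMDD) and intraday tables named events_intraday_YYYYMMDD. A small helper like the one below makes it easy to point queries at the right table. The helper is hypothetical, and the project and property IDs are placeholders.

```python
from datetime import date


def export_table_id(project: str, property_id: str, day: date,
                    intraday: bool = False) -> str:
    """Build the fully qualified ID of a GA4 export table for one day."""
    prefix = "events_intraday_" if intraday else "events_"
    return f"{project}.analytics_{property_id}.{prefix}{day:%Y%m%d}"


print(export_table_id("my-project", "123456789", date(2023, 3, 1)))
print(export_table_id("my-project", "123456789", date(2023, 3, 1), intraday=True))
```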

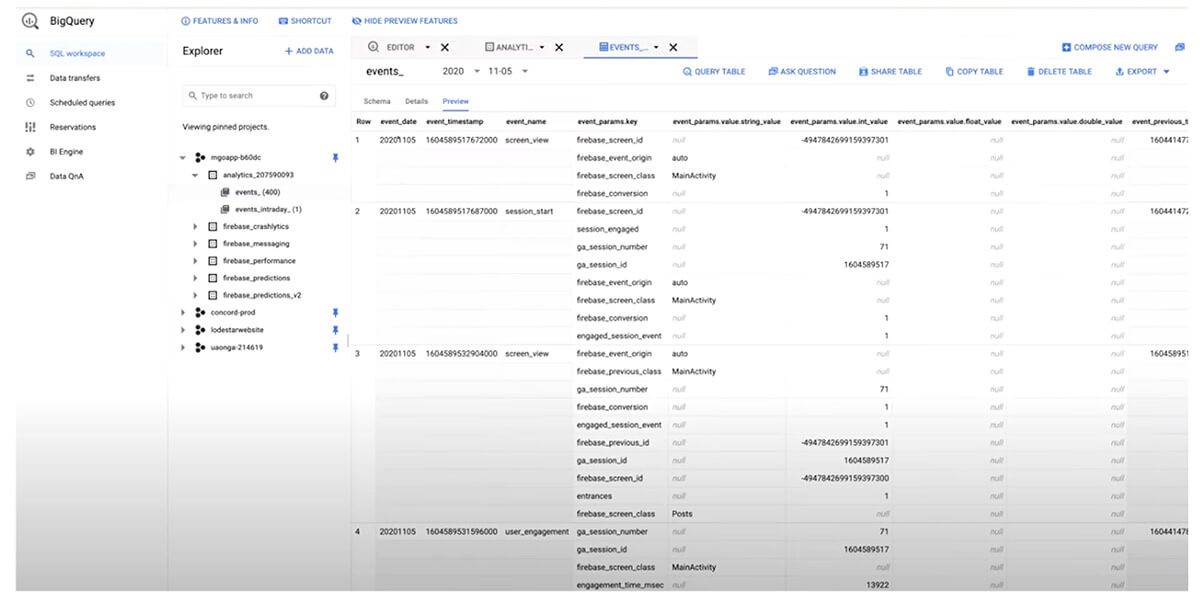

3. What to expect from your data

Key info about the schema

GA4 schema has multiple scopes

- User level

- Session level

- Event level

You will see a row per event

- GA4 BigQuery data looks like a giant excel sheet

- You can preview the data in BigQuery with no programming

There are multiple nested scopes

- Event, user, ecommerce, item, geo, device type

Easy to join

- Since the schema is the same on each GA4 GBQ table, you can join multiple properties in GBQ rather than using rolled-up properties.

The schema takes some time to get used to, so it's essential to practice and test your code on the public data set or a sample of your data.

Additionally, GA4 and BigQuery figures won't always match (depending on your reporting identity). Even so, you should reconcile your results against GA4 where possible to make sure you have unnested the scoped data correctly.
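Every GA4 export table shares the same schema, so combining properties can be as simple as a UNION ALL. This Python sketch builds such a query for one day. The project ID, property IDs and chosen columns are placeholders, so adjust them to your own export.

```python
def union_properties_query(project: str, property_ids: list[str], day_suffix: str) -> str:
    """Build SQL that stacks one day's events from several GA4 properties."""
    selects = [
        f"SELECT '{pid}' AS property_id, event_date, event_name, user_pseudo_id\n"
        f"FROM `{project}.analytics_{pid}.events_{day_suffix}`"
        for pid in property_ids
    ]
    # Identical schemas mean the selected columns line up across properties.
    return "\nUNION ALL\n".join(selects)


print(union_properties_query("my-project", ["111111111", "222222222"], "20230301"))
```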

Learn more: [GA4] BigQuery Export schema

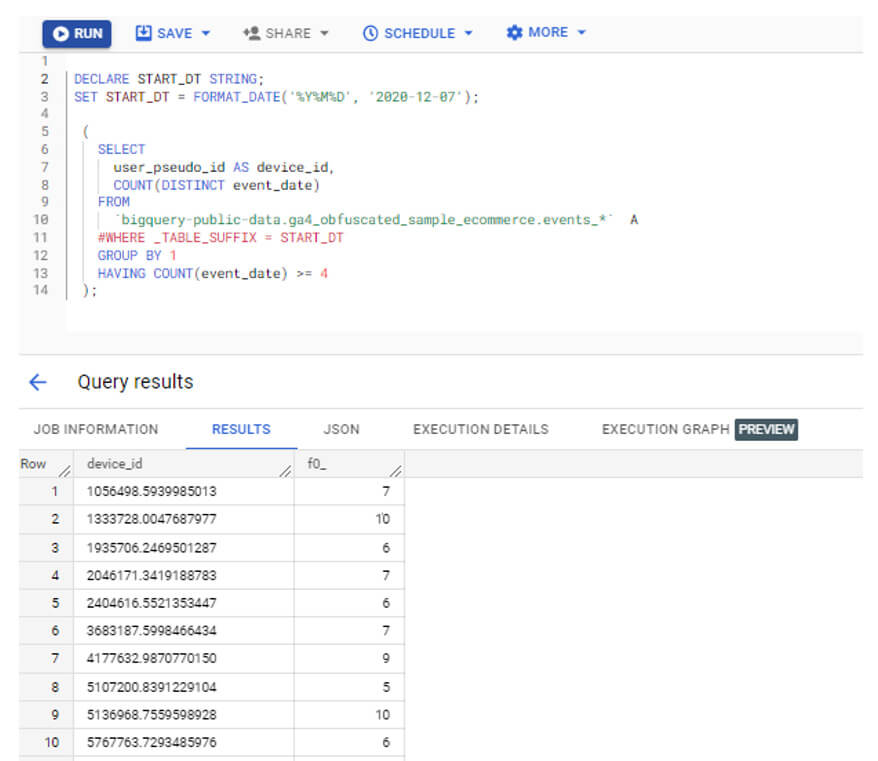

Get practicing

Google has public datasets to get you started.

BigQuery is accessed via SQL in the console, with a wealth of public datasets. You can easily create a project in GCP if you have a Google email address.

Key facts

- Remember, you will still use your allowance to run programs against public datasets.

- You can even connect Looker Studio on these tables.

- You cannot break the public datasets.

- The public GA4 table has the same schema as your GA4 property will have.

Learn more: BigQuery sample dataset for Google Analytics 4 ecommerce web development.
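As a first exercise, this sketch builds a query against the public GA4 sample dataset that you can paste into the BigQuery console. Filtering on _TABLE_SUFFIX limits the query to the daily tables in your date range. That keeps the amount of data scanned, and therefore your allowance usage, down. The function name is our own, and the dates you pass should fall within the sample dataset's date range.

```python
PUBLIC_GA4_TABLE = "bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_*"


def event_summary_query(start: str, end: str) -> str:
    """SQL counting events and users per event name between two YYYYMMDD suffixes."""
    return f"""
SELECT
  event_name,
  COUNT(*) AS event_count,
  COUNT(DISTINCT user_pseudo_id) AS users
FROM `{PUBLIC_GA4_TABLE}`
WHERE _TABLE_SUFFIX BETWEEN '{start}' AND '{end}'
GROUP BY event_name
ORDER BY event_count DESC
"""


print(event_summary_query("20210101", "20210107"))
```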

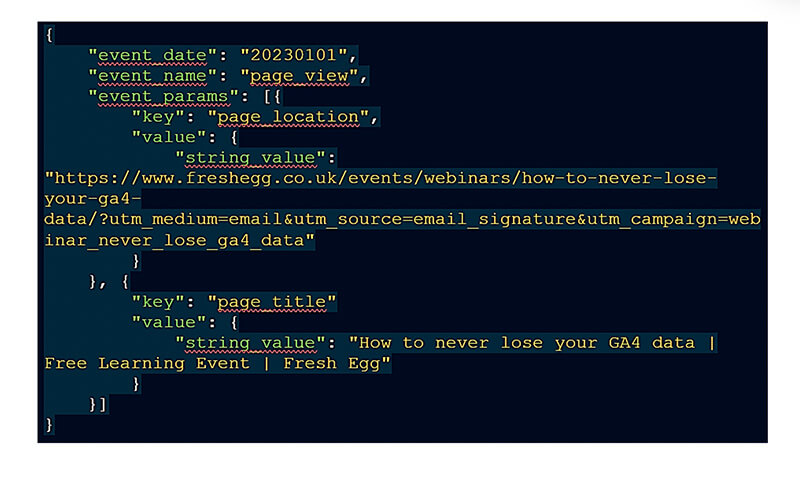

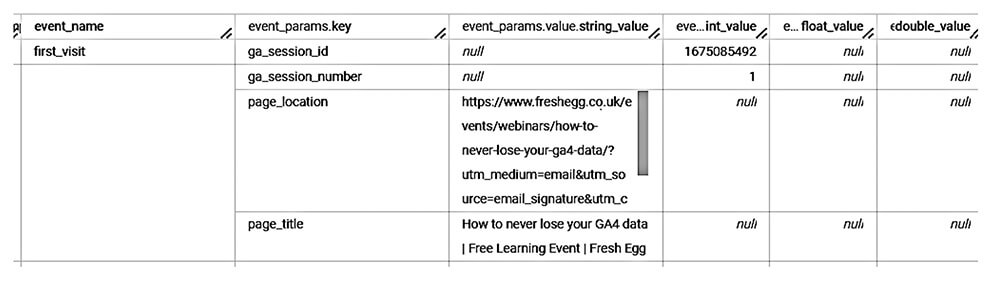

What does it look like?

GA4 data in BigQuery is nested, which can make it challenging to work with at first.

Each event parameter is stored as a key paired with a value, which sits in a string, integer, float or double field. This means you need to unnest the data.

Key facts

- Nested data is significantly cheaper to store.

- You can unnest BigQuery data in SQL to flatten it to standard rows.

- You can edit and transform values and add reference data.
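To see what "nested" means in practice, here is a hand-built Python dictionary shaped like a simplified GA4 export row. Alongside it is a function that flattens selected parameters into ordinary columns, which is what UNNEST does in SQL. The values are invented and the row is cut down to a few fields. It illustrates the structure only and is not how BigQuery processes data.

```python
from typing import Any, Optional

# Simplified, invented row: event_params is a list of {key, value} records,
# where only one of the value fields is populated for each parameter.
event_row: dict[str, Any] = {
    "event_name": "page_view",
    "user_pseudo_id": "1234.5678",
    "event_params": [
        {"key": "page_location",
         "value": {"string_value": "https://example.com/", "int_value": None,
                   "float_value": None, "double_value": None}},
        {"key": "ga_session_id",
         "value": {"string_value": None, "int_value": 1672531200,
                   "float_value": None, "double_value": None}},
    ],
}


def get_param(row: dict[str, Any], key: str, value_type: str) -> Optional[Any]:
    """Return one parameter's value, or None if the key is absent."""
    for param in row["event_params"]:
        if param["key"] == key:
            return param["value"].get(value_type)
    return None


def flatten(row: dict[str, Any], params: dict[str, str]) -> dict[str, Any]:
    """Turn selected nested parameters into top-level columns."""
    flat = {"event_name": row["event_name"], "user_pseudo_id": row["user_pseudo_id"]}
    for key, value_type in params.items():
        flat[key] = get_param(row, key, value_type)
    return flat


print(flatten(event_row, {"page_location": "string_value", "ga_session_id": "int_value"}))
```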

Unnesting the data

Key things to remember

- The general syntax for extracting parameters and nested values is simple, and you usually only need to change two elements, the variable type and the parameter name.

- You can unnest in the SELECT statement or in the FROM clause.

- It's less complex than the UA dataset.

- You must understand your GTM and GA4 design when using this data.
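The "change two elements" pattern turns into a reusable scalar subquery. This Python sketch generates that subquery for any parameter name and value type. It also shows the alternative of unnesting in the FROM clause, which returns one row per parameter. The helper names and the short type labels are our own, and you supply the table.

```python
# Short labels mapped to GA4 export value fields (the labels are our own shorthand).
VALUE_FIELDS = {
    "string": "string_value",
    "int": "int_value",
    "float": "float_value",
    "double": "double_value",
}


def param_expr(key: str, value_type: str = "string") -> str:
    """Scalar subquery that extracts one event parameter (SELECT-statement unnest)."""
    field = VALUE_FIELDS[value_type]
    return (f"(SELECT value.{field} FROM UNNEST(event_params) "
            f"WHERE key = '{key}') AS {key}")


def page_view_query(table: str) -> str:
    """One row per page_view event, with parameters as columns."""
    columns = ",\n  ".join([
        "user_pseudo_id",
        param_expr("ga_session_id", "int"),
        param_expr("page_location"),
    ])
    return f"SELECT\n  {columns}\nFROM `{table}`\nWHERE event_name = 'page_view'"


def params_long_query(table: str) -> str:
    """FROM-clause unnest: one row per event parameter."""
    return (f"SELECT event_name, ep.key, ep.value.string_value, ep.value.int_value\n"
            f"FROM `{table}`, UNNEST(event_params) AS ep")


print(page_view_query("my-project.analytics_123456789.events_20230301"))
```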

4. What next with your GA4 data?

GA4 and GBQ together


BigQuery and the Google Analytics 4 interface complement each other and should be used together for the best results. Some examples are:

| Scenario | Use |
|---|---|
| You need to review a page's performance over 12 months quickly | GA4 |
| You need to assess trends on a page over a couple of years without sampling or cardinality limits | BQ |
| You want to review, ad hoc, the number of users who have performed an event | GA4 |
| You have not registered a parameter as a custom dimension or metric and want to review it over time | BQ |
| You want to check the number of users who completed a specific conversion | GA4 |
| You want to report on conversions triggered before the event was marked as a conversion | BQ |

Connect to Looker Studio

The GA4 API quota limits have put more focus on BigQuery.

Connect GA4 directly to Looker Studio if you have very low volumes of data and require no transformation.

You may need to join historical data or transform data, or you may have over 30,000 events per day. In those cases, build a single session view in BigQuery and connect it via the BigQuery connector.

Combine data in BigQuery and then connect to Looker Studio to speed up reporting.

It's not just Looker – BigQuery can connect to any major visualisation tool and other cloud and on-prem platforms.
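As a starting point for a single session view, the sketch below generates SQL that rolls event rows up to one row per session. Sessions are keyed on user_pseudo_id and the ga_session_id event parameter, which is a common convention, although you may define sessions differently. The function name and output columns are illustrative assumptions. You would typically save the result as a table or view and connect Looker Studio to that.

```python
def session_view_query(table: str) -> str:
    """SQL that aggregates GA4 event rows to one row per session."""
    return f"""
WITH flat_events AS (
  SELECT
    user_pseudo_id,
    (SELECT value.int_value FROM UNNEST(event_params) WHERE key = 'ga_session_id') AS ga_session_id,
    event_timestamp,
    event_name
  FROM `{table}`
)
SELECT
  CONCAT(user_pseudo_id, '.', CAST(ga_session_id AS STRING)) AS session_key,
  user_pseudo_id,
  ga_session_id,
  TIMESTAMP_MICROS(MIN(event_timestamp)) AS session_start,
  COUNTIF(event_name = 'page_view') AS page_views,
  COUNT(*) AS event_count
FROM flat_events
GROUP BY user_pseudo_id, ga_session_id
"""


print(session_view_query("my-project.analytics_123456789.events_20230301"))
```

Extracting ga_session_id in a CTE first keeps the GROUP BY simple, because the outer query only groups on plain columns.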

Learn more: Using Looker Studio for actionable insights

Data modelling and scheduling

GCP has many tools to help you make more from your data.

- Dataform provides data modelling that connects to GitHub and shows data lineage.

- Scheduling allows you to automate code to run daily to update your tables and reports.

- Supermetrics, Fivetran and other tools provide the functionality to import more data from sources like Facebook Business Manager.
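Daily jobs usually process the previous day's export table, because the daily table for a given date is completed after that day ends. The hypothetical helper below works out which table suffix to process. The SQL string shows the same idea as a BigQuery scheduled query, where the scheduler supplies @run_date. The project and property IDs in the table name are placeholders. The one-day lag is an assumption, and you may want to increase it if your export lands late.

```python
from datetime import date, timedelta


def daily_suffix(run_date: date, lag_days: int = 1) -> str:
    """YYYYMMDD suffix of the export table a daily job should process."""
    return (run_date - timedelta(days=lag_days)).strftime("%Y%m%d")


# Same logic inside a scheduled query; @run_date is provided at run time.
SCHEDULED_SQL = """
SELECT event_name, COUNT(*) AS event_count
FROM `my-project.analytics_123456789.events_*`
WHERE _TABLE_SUFFIX = FORMAT_DATE('%Y%m%d', DATE_SUB(@run_date, INTERVAL 1 DAY))
GROUP BY event_name
"""

print(daily_suffix(date(2023, 3, 1)))  # 20230228
```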

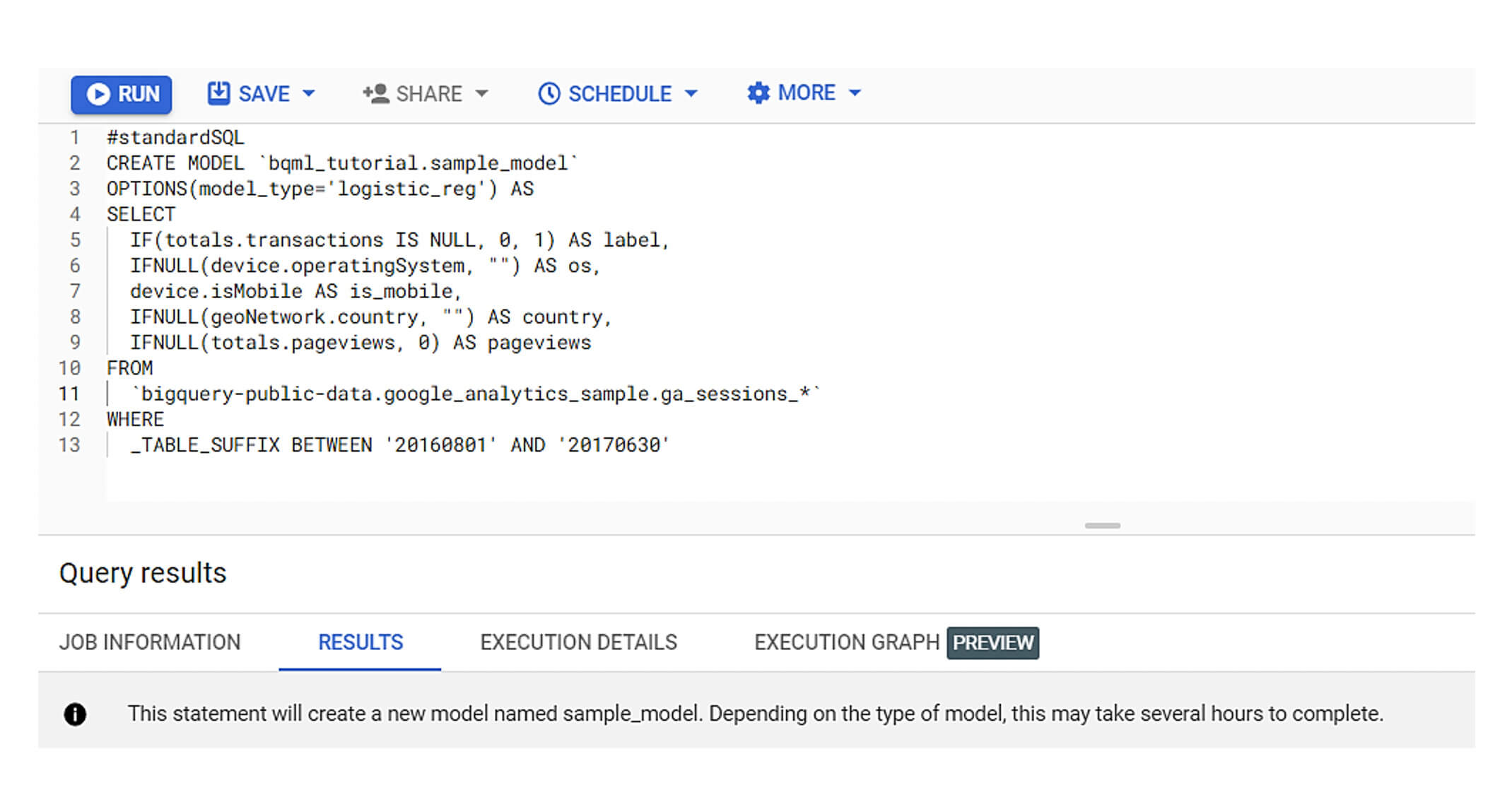

Machine learning made easy

GCP has built-in machine learning called AutoML.

- AutoML means you and your teams can quickly train and run models without external tools or deep programming and data science experience.

- Models and results stay inside GCP, so you do not need to provision more tools to run models.

- More options are always being added, and you can review the model in tools like Looker.

- There is a charge for processing and storing models, so always review pricing.

Things to remember

- The GA4 BQ export does not backfill, so connect it today even if you don't use the data immediately.

- GCP is a scalable tool, and it's not just for GA4.

- GA4 BQ data takes a bit of time to get used to, but there is a great community to support your development.

- Using GA4 BQ data will allow you to activate your data and analyse it in far more depth.

5. What else can you do with GCP?

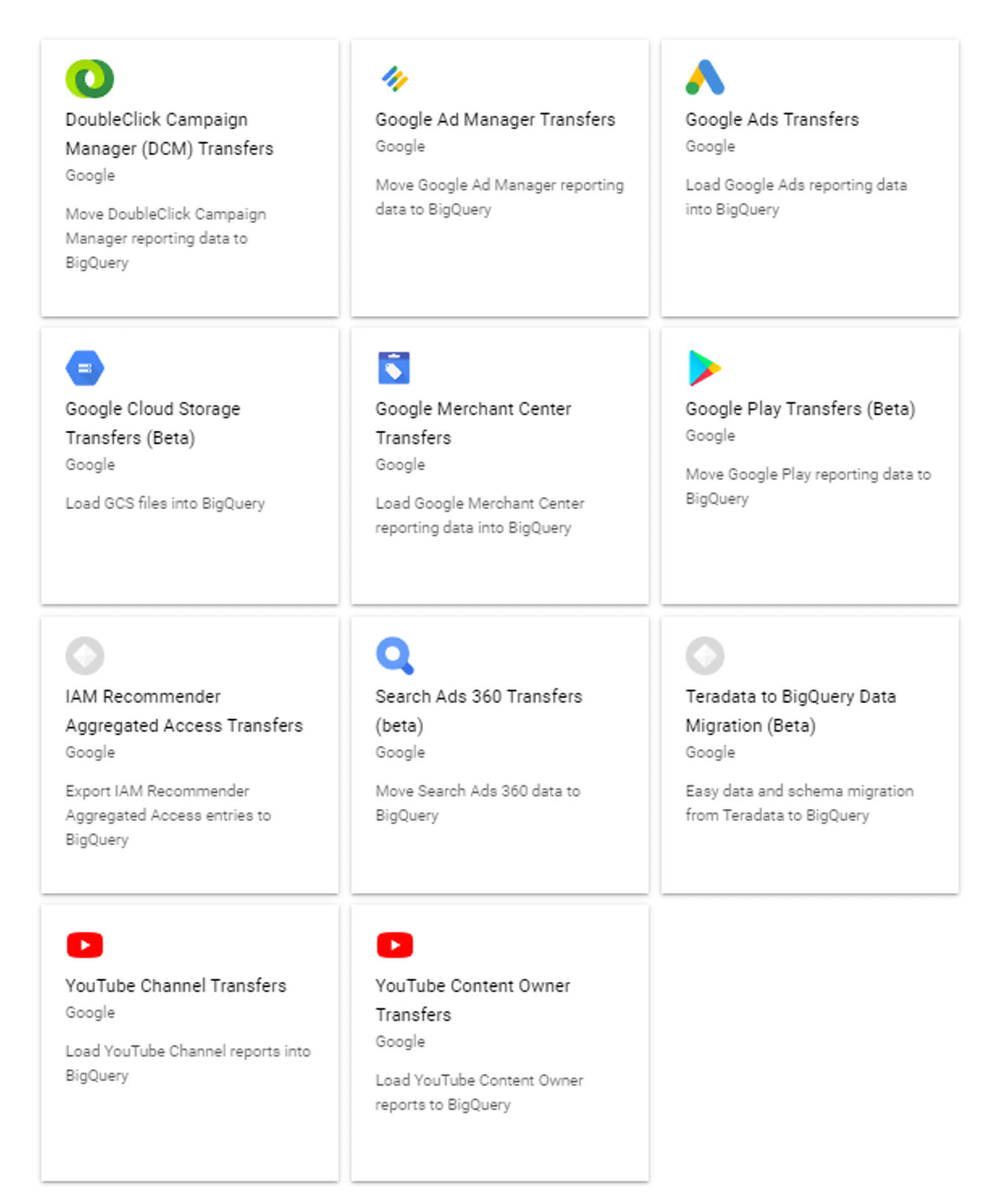

BigQuery and GCP aren't just about Google Analytics 4.

- Upload your local CSV, Google Sheets and text files - GCP provides no-code tools to add more data to BigQuery.

- Run Python and other processes to connect APIs - Tools like Cloud Functions let you update tables automatically.

- Schedule and transform data in the console - Configure SQL code to run daily without any manual processing.

- Connect other data warehouses - Create a hybrid cloud architecture and get the best out of all tools.

Save costs on data engineering

BigQuery has 11 data transfer services which are free of charge to use.

- You can join these sources with your GA4 data.

- Connect to Looker Studio and other visualisation tools.

- Connect to other cloud platforms.

- Analyse the data in BigQuery.

- The datasets are especially valuable for digital marketing analysts.

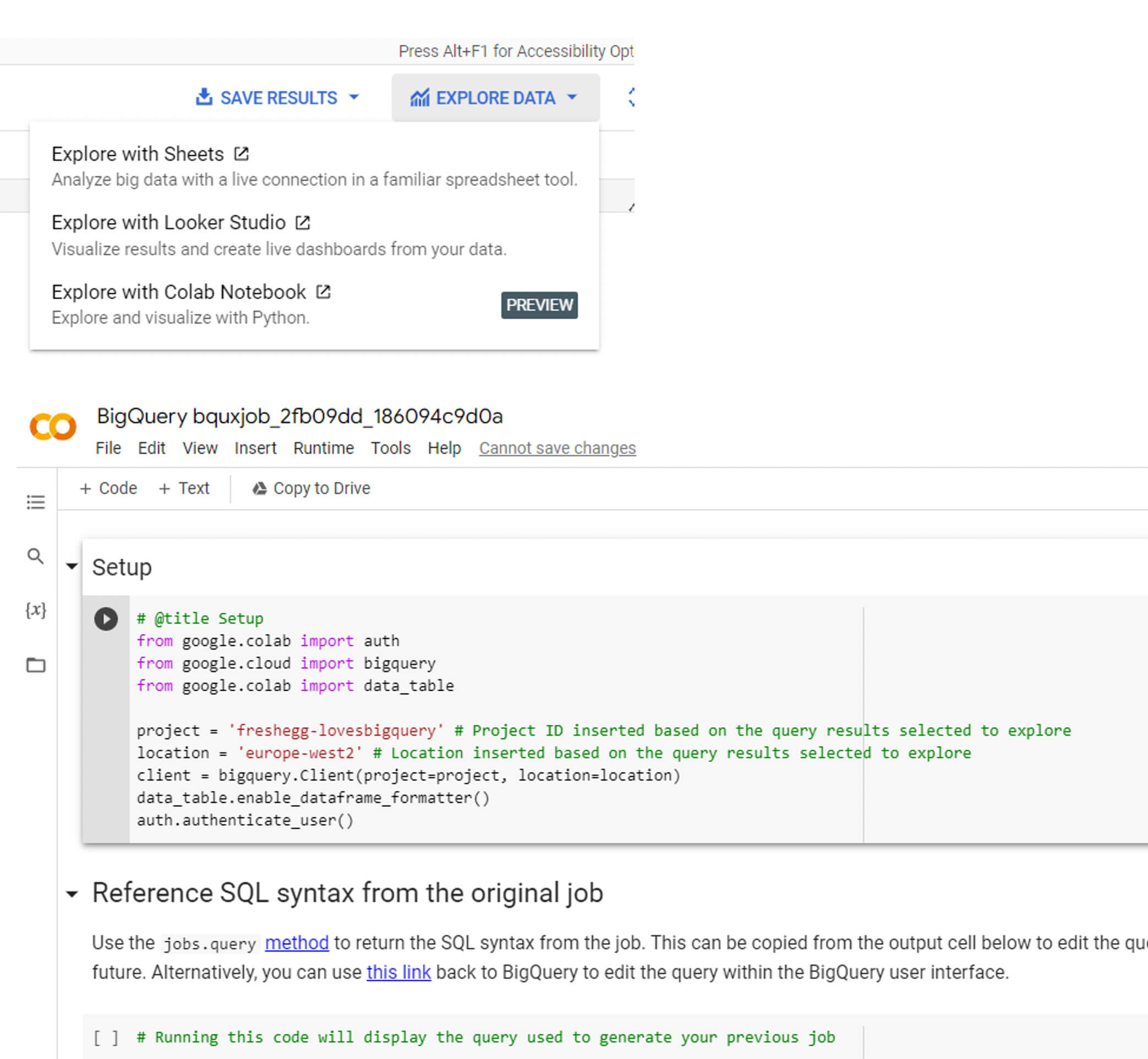

Explore data with other tools

GCP is making massive strides towards a one-stop platform for analytics and engineering.

- When you run a query in BigQuery, you can explore the results with Sheets, Looker Studio or Colab.

- When you select Colab, you will get pre-built code, which saves significant analytics development time.

- Connected Sheets will contain the SQL and pre-built formatting, stored as a Google Sheet in your Drive.

- It all takes less than five seconds!

Summary

With your GA4 event and user level data, you can start pulling actionable insights that allow you to customise and optimise your analysis. But that's not all. Google Cloud Platform can act as a centralised marketing data warehouse or lake. When used with your CRM and marketing data, it lets you build a true single customer view and a holistic view of your user journeys, and improve marketing performance.

BigQuery does take some onboarding, but there are many tools and support out there, and we are here to support you on this journey.

Watch our learning session on How to never lose your GA4 data


Can we help you with GCP?

Tell us your needs and we'll be in touch


Do you have a challenge we can help you with?

Let's have a chat about it! Call us on 01903 285900