Five Things from Google I/O and what they mean for SEO

By Callum Grantham | 24 May 2018

Every year, web developers, SEOs and the great and good across the world tune into Google I/O for the latest big reveals, insights and well-timed clarifications from everyone’s favourite search giant. While the conference doesn’t strictly focus on search, there’s always plenty of useful information for anyone in the field – and this year was no exception.

Duplex: the one that caught everyone’s attention

While it sounds suspiciously like something you’d find in the home decorating section of B&Q, Google Duplex is an AI system that integrates with Google Assistant to emancipate you from the tyranny of the functional phone call.

Hyperbole aside, the snippets Google CEO Sundar Pichai shared were impressive, showcasing Duplex’s ability to navigate the nuances of human conversation with admirable ease. The AI even includes verbal fillers (Google calls them “speech disfluencies”) such as “uhm” and “er” to create a more naturalistic conversational flow and, handily, to serve as a verbal egg timer while the neural network works out what to say next.

Like some of the other things on show at I/O, Duplex is Search-adjacent, in that it looks like it will use Search to find the information it needs to complete its essential functions. For businesses hoping to cash in on “book an appointment” requests, it’s a good bet that Google will use Google My Business listings in the same way it has with “near me” searches on Google Assistant. From a search perspective, it’ll be interesting to see how it selects information for instructions more complex than booking a specified service or restaurant.

At present, Duplex only works in limited situations, but it’s easy to see how in the not-too-distant future businesses could harness Duplex in call centres and as a virtual receptionist. In the meantime, Google will be testing Duplex’s ability to take the useful information it receives over a phone call, in this case holiday opening hours, and make it available online to Google users.

Smart Displays show the limitations of voice-only search

Ever the innovator, Google is following hot on the heels of Amazon’s Echo Show – a virtual assistant with a screen – with its own Smart Displays, which bring “voice and visuals together”. A bit like a phone, then. Voice search has been a huge talking point over the past couple of years, with nearly every conference speech heralding it as the Second Coming of search. So, what does the inclusion of screens with virtual assistants mean for voice search? I asked our SEO Director, Mark Chalcraft, for his take.

Mark said, "What this shows us is that voice-only search only works for users up to a point, despite the hype about a revolution. In many situations, only a visual result will meet the user's need, whether that's a YouTube video, product imagery, or a map.

"The idea that voice-only devices will displace the traditional search engine is probably too simplistic - in some scenarios a voice response is fine but in others it just won't work. Think of human interactions - in scenarios where a person would refer to a visual reference to communicate information, such as a map for directions, the same principle is likely to win out in search.

"As with anything, the key to understanding how to meet users' needs (and therefore to win in search) is understanding the underlying behaviours. As yet, we don't have enough data on voice search use in the real world to know the answer to these questions."

Given the paucity of available data, if you want to optimise your presence on Google Home or Alexa right now, we think it’s better to focus on creating branded Alexa Skills and Actions on Google for Google Home, and on making sure your content is useful to your audience, rather than guessing at what users are searching for in between song requests.

Google’s JavaScript drive-by

Google lifted the lid on its JavaScript crawling process and explained that Googlebot struggles to render large amounts of JavaScript quickly. In practice, Google takes a first pass at the site, rolling on past, having a look and taking in the basic details from the initial HTML. Then, once it has more rendering resources available, it comes back for a closer pass, renders the JavaScript and makes sure it takes down all the details.

So, if you’re using a lot of client-side JavaScript, it could take longer for your website to be fully indexed, and Google might miss some details on the first crawl – notably canonicals and metadata. What does that mean? Well, it could take a while for your content to be indexed, resulting in less visibility in search and, potentially, lost earnings. Moreover, if Google misses canonical tags, it may pick a different URL from the one you intended as the main version of a page, which could have serious consequences for your organic visibility.
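One practical check that follows from this is to look at what the first pass sees. The minimal TypeScript sketch below uses a hypothetical `findCanonical` helper to scan the raw HTML your server returns, before any JavaScript runs, for a canonical tag. It relies on a simple regex scan rather than a real HTML parser (an assumption that is fine for a spot check, not for a full audit).

```typescript
// Sketch: does the initial HTML (what Google sees before rendering) already
// contain a canonical tag? `findCanonical` is a hypothetical helper, and the
// regex-based scan is a simplifying assumption; a full audit should parse HTML.
function findCanonical(rawHtml: string): string | null {
  // Collect every <link ...> tag from the unrendered markup.
  const linkTags: string[] = rawHtml.match(/<link\b[^>]*>/gi) ?? [];
  for (const tag of linkTags) {
    // Attributes can appear in any order, so read rel and href separately.
    const rel = /\brel\s*=\s*["']([^"']*)["']/i.exec(tag);
    const href = /\bhref\s*=\s*["']([^"']*)["']/i.exec(tag);
    if (rel && href && rel[1].toLowerCase().split(/\s+/).includes("canonical")) {
      return href[1];
    }
  }
  // No canonical in the raw HTML: it may only exist after JavaScript runs.
  return null;
}

// Example inputs: a server-rendered page versus a client-side app shell.
const serverRendered = '<head><link rel="canonical" href="https://example.com/shoes"></head>';
const appShell = '<head><script src="/app.js"></script></head>';
console.log(findCanonical(serverRendered)); // https://example.com/shoes
console.log(findCanonical(appShell));       // null
```

To try it on a live page, fetch the URL with a plain HTTP client (which does not execute JavaScript) and pass the response body in. If the canonical only appears in the browser's rendered DOM, it is being injected client-side and may be missed on the first pass.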

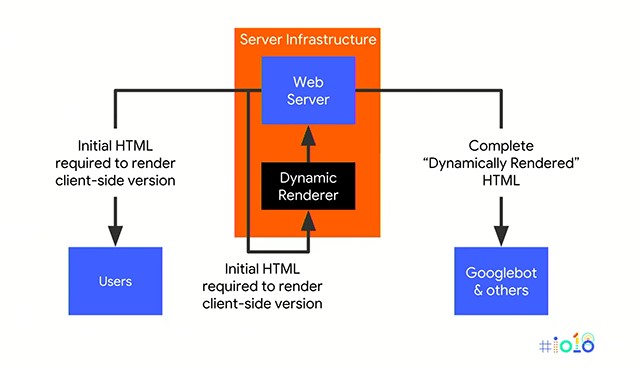

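Google did propose a solution called ‘Dynamic Rendering’, a method of serving server-side rendered content to Google while serving client-side rendered content to users. Implementation details were fairly thin on the ground, but there are two tools to help you implement Dynamic Rendering: Puppeteer and Rendertron (yes, like a Transformer). In outline, the server checks each request’s user agent: crawlers such as Googlebot are routed to a renderer that returns fully rendered static HTML, while regular visitors get the normal client-side JavaScript version of the page.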
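A minimal TypeScript sketch of the routing decision at the heart of dynamic rendering, assuming crawlers are detected by user-agent string. The helper names (`isCrawler`, `decideRendering`), the crawler pattern list and the renderer address are all hypothetical; the sketch assumes a Rendertron-style service exposing a `/render/<encoded URL>` endpoint, so check the actual URL format of whatever renderer you deploy.

```typescript
// Dynamic rendering routing sketch. Helper names, the crawler list and the
// renderer address are hypothetical assumptions, not part of any official API.

// Assumed user-agent patterns for crawlers that should get pre-rendered HTML.
const CRAWLER_PATTERNS: RegExp[] = [/googlebot/i, /bingbot/i, /yandex/i, /baiduspider/i];

// Assumed address of a Rendertron-style service exposing /render/<encoded URL>.
const RENDERER_BASE = "http://localhost:3000/render";

type RenderDecision =
  | { kind: "prerendered"; fetchFrom: string } // proxy HTML from the renderer
  | { kind: "client-side" };                   // serve the normal JavaScript app

function isCrawler(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  return CRAWLER_PATTERNS.some((pattern) => pattern.test(userAgent));
}

function decideRendering(pageUrl: string, userAgent: string | undefined): RenderDecision {
  if (isCrawler(userAgent)) {
    // Crawlers get static HTML, so canonicals and metadata exist on the first pass.
    return { kind: "prerendered", fetchFrom: `${RENDERER_BASE}/${encodeURIComponent(pageUrl)}` };
  }
  // Everyone else gets the client-side rendered experience.
  return { kind: "client-side" };
}

const googlebotUA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(decideRendering("https://example.com/shoes", googlebotUA).kind); // prerendered
console.log(decideRendering("https://example.com/shoes", "Mozilla/5.0 (Windows NT 10.0) Chrome/66.0").kind); // client-side
```

In a real server you would call `decideRendering` from your request handler, then either proxy the renderer's response or serve the app shell. Whatever the renderer returns should match what users see: Google's guidance accepts dynamic rendering only when crawlers and users get similar content, which is a big part of getting the process right.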

The crux is that if you decide to take it on, you need to get this process right; otherwise, it could significantly damage your performance in organic search. Equally, while this could dissuade people from using JavaScript, an important takeaway is that Google recognises the benefits of JavaScript for user experience and is trying to find a way for Googlebot to reflect them in search, even if the solution is convoluted.

Visibility on Android

Android is introducing a few things to help people spend less time on their phones and avoid the convoluted process that comes with treating every app as a discrete ecosystem. App Actions allow you to mark your app as providing content and capabilities that meet specific user intents, which will help your app be surfaced in more places throughout Android and Google.

It’s a much more user-focused approach that echoes Google’s research around search intent and, a bit like structured data mark-up in search, helps brands stand out for areas where they can provide value to users.

Related to Actions, Slices are templates that let you surface interactive content from your app’s UI in the Google Search app and Google Assistant. Type a search into your phone and your app’s Slices will show up, allowing users to access specific functions of your app without having to open it separately.

It’s basically a deep link but it has the potential to change the way people use apps – spending less time in the app itself and dipping in for specific functions depending on context. If it works well, it should mean a much better experience for your users.

While none of this is strictly search engine optimisation, incorporating Actions and Slices into your app infrastructure could have a big influence on your visibility and whether people continue to use your app. Moreover, it could help you focus on the specific areas where you can provide value to your customers, which should be reflected across your digital offering.

Google Lens and visual search

Google Lens was launched in 2017 but has yet to fully realise its ‘point and discover’ potential for search; however, new features unveiled at Google I/O suggest it’s getting closer. First up is smart text selection, whereby you can point your camera at real-life text, highlight it within Lens and then use it to search or for various other functions.

It certainly saves you the neck strain of glancing between router and phone as you painstakingly copy your friend’s Wi-Fi code, only to realise you’ve missed a seven somewhere and have to start again from the beginning. It doesn’t have a huge impact on how you search, but it does make it easier to reference things on the fly.

Quicker research is also the MO of Lens’ real-time functionality which, combined with Lens being integrated into the camera on some Android models, enables you to point your camera at the world around you and uncover all manner of fun facts, like just who that eroded statue is supposed to be.

This feature was essentially already available through Google Photos, but these changes make it more prominent and instant, which will likely increase the number of people using it and could have a big impact on ‘I want to know’ searches - where users are exploring or researching.

Perhaps more impactful is ‘Style Match’, a feature that provides shopping suggestions based on the clothing and furniture you point your camera at. The key thing with Style Match is that it doesn’t just find the exact object – although it will also do that – but also other items that are similar in style. This has potentially huge implications for ecommerce brands – no doubt the principle will move beyond clothing – and highlights the importance of utilising Google Shopping.

Like many other things at Google I/O, these changes highlight the importance of optimising across Google’s many services, not just making your website search friendly. Through developments like Slices and Style Match, Google is demonstrating its continued focus on user intent, so making sure you’re focusing on the needs of your audience across all the bases will continue to be the best route to success.

Got questions about SEO? Get in touch on 01903 334807 or send us a message.